Lifting Spreadsheet Solutions to the Power Platform

We will redesign and migrate spreadsheet solutions to run on the Microsoft Power Platform. Within this process we will identify extended capabilities for integration, optimization and further automation.

We will redesign and migrate spreadsheet solutions to run on the Microsoft Power Platform. Within this process we will identify extended capabilities for integration, optimization and further automation.

Increase data quality, security, and efficiency

Integrate solutions with other Applications

Create reliable, automated reporting

Lifting Spreadsheet Solutions to the Power Platform

We will redesign and migrate spreadsheet solutions to run on the Microsoft Power Platform. Within this process we will identify extended capabilities for integration, optimization and further automation.

Increase data quality, security, and efficiency

Integrate solutions with other Applications

Create reliable, automated reporting

Use Cases

Use Cases

Use Cases

Spreadsheet "solutions" are still omnipresent, like in:

Spreadsheet "solutions" are still omnipresent, like in:

Spreadsheet "solutions" are still omnipresent, like in:

Monthly forecasting

Monthly forecasting

Timesheet entry

Timesheet entry

Quotes / Offers / Invoices

Quotes / Offers / Invoices

Asset Management

Asset Management

Financial Valuation

Financial Valuation

Industry-specific applications, for example: pressure measurements, structural engineering, electronic medical record combination, etc.

Industry-specific applications, for example: pressure measurements, structural, electronic medical record combination, etc.

Industry-specific applications, for example: pressure measurements, structural engineering, electronic medical record combination, etc.

This guide aims to explain how spreadsheet solutions increase maintenance effort, have negative monetary impacts, and then outline the process of migrating it to the Power Platform, combining Power Apps, Power Automate, and Power BI to create a sustainable, scalable, and efficient solution.

This guide aims to explain how spreadsheet solutions increase maintenance effort, have negative monetary impacts, and then outline the process of migrating it to the Power Platform, combining Power Apps, Power Automate, and Power BI to create a sustainable, scalable, and efficient solution.

How collaboration in Spreadsheets contributes to poor data quality

How collaboration in Spreadsheets contributes to poor data quality

How collaboration in Spreadsheets contributes to poor data quality

Collaboration in spreadsheet applications like Excel exacerbates the issue. Multiple editors complicate accurate data entry and interpretation because:

Data Entry Mistakes: Typos, incorrect formulas, and misalignments (1).

No Security: In it's highest form you can lock or unlock columns with a password for all editors. The lack of granularity and unauthorized access can result sin significant financial and reputational damage (1).

Lack of Validation: Errors can go unnoticed, especially in complex spreadsheets with numerous interlinked files.

Flawed business decisions and manual work to clean up erroneous spreadsheets leads to financial losses (2).

The effects of poor data quality due to spreadsheet errors can be immense. Take the following examples:

Collaboration in spreadsheet applications like Excel exacerbates the issue. Multiple editors complicate accurate data entry and interpretation because:

Data Entry Mistakes: Typos, incorrect formulas, and misalignments (1).

No Security: In it's highest form you can lock or unlock columns with a password for all editors. The lack of granularity and unauthorized access can result sin significant financial and reputational damage (1).

Lack of Validation: Errors can go unnoticed, especially in complex spreadsheets with numerous interlinked files.

Flawed business decisions and manual work to clean up erroneous spreadsheets leads to financial losses (2).

The effects of poor data quality due to spreadsheet errors can be immense. Take the following examples:

$10.5m

Excel typo

An employee in Bulgaria intended to refund an Australian customer $100, but made a typo in one cell resulting in a refund of $10.47m (3).

10,000

non-existent seat tickets

... were sold by the London Olympics, due to a spreadsheet error (4).

$24m

Copy-Paste error

TransAlta bid $24m on a electricity transmission contract (4).

In a broader view the most significant impacts are financial losses and efficiency decrease:

In a broader view the most significant impacts are financial losses and efficiency decrease:

20-30%

Operational Expenses

... are related to bad data. And the data quality affects 20% of labor productivity (5).

~60%

Validate data manually

Data teams are less productive because they don’t trust data from spreadsheets (6).

39%

Don't track bad data losses

The execution of operations and strategy. Operational and strategic process groups should be optimized and automated.

Organizations that don't measure these losses are doomed to become less competitive over time, due to the overhead in validation, communication, verification, technical troubleshooting of spreadsheets, etc.

Organizations that don't measure these losses are doomed to become less competitive over time, due to the overhead in validation, communication, verification, technical troubleshooting of spreadsheets, etc.

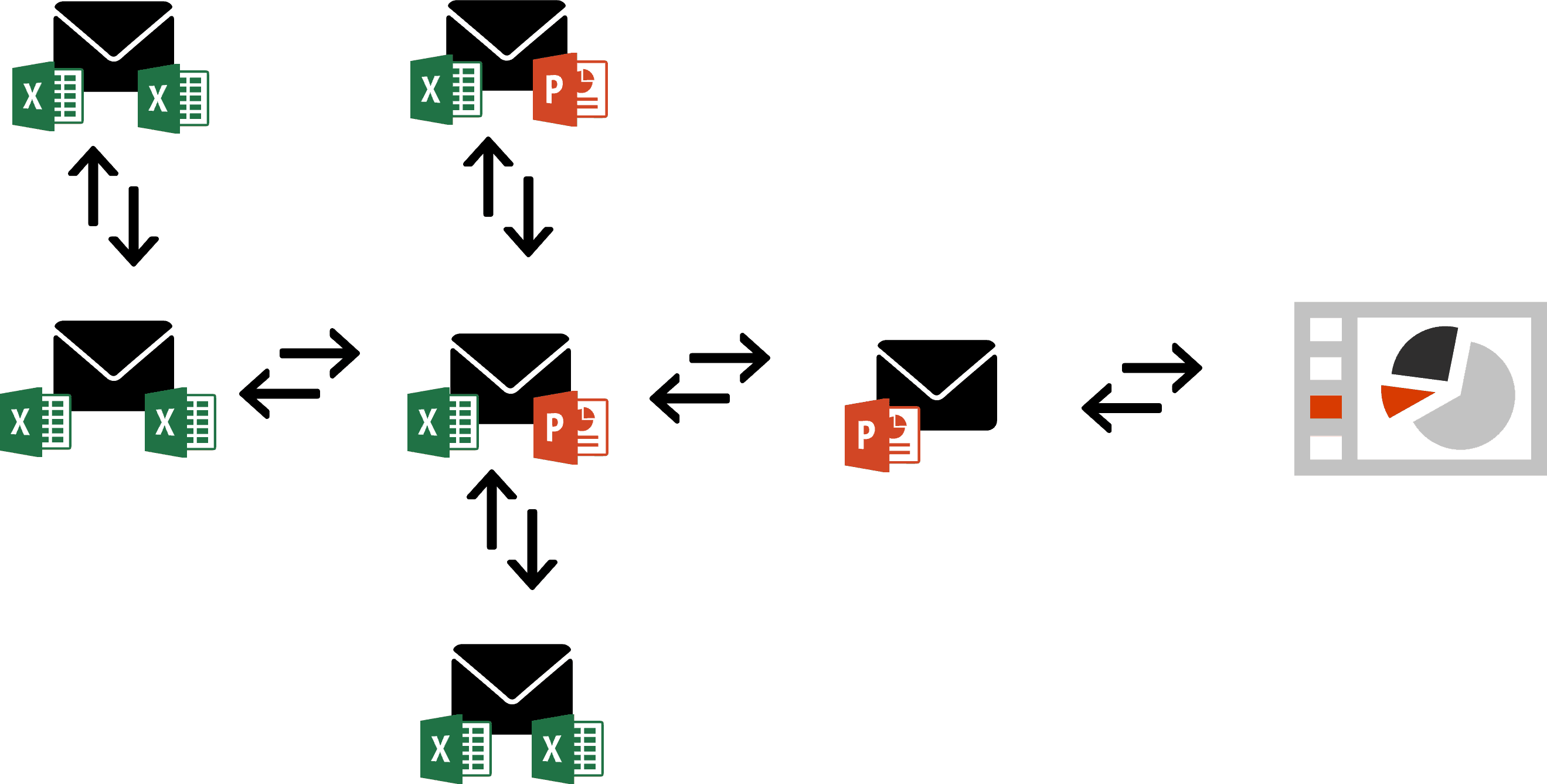

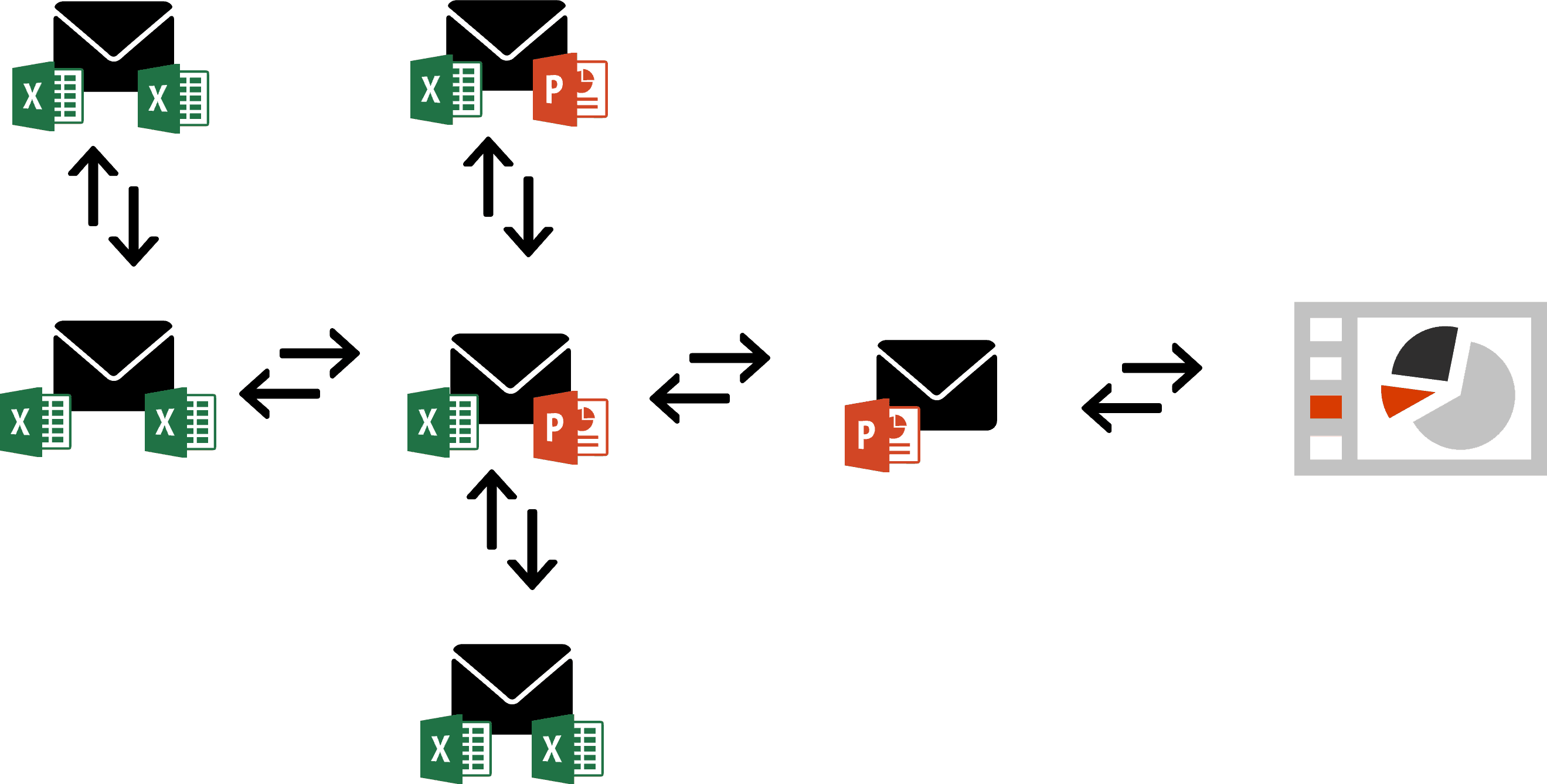

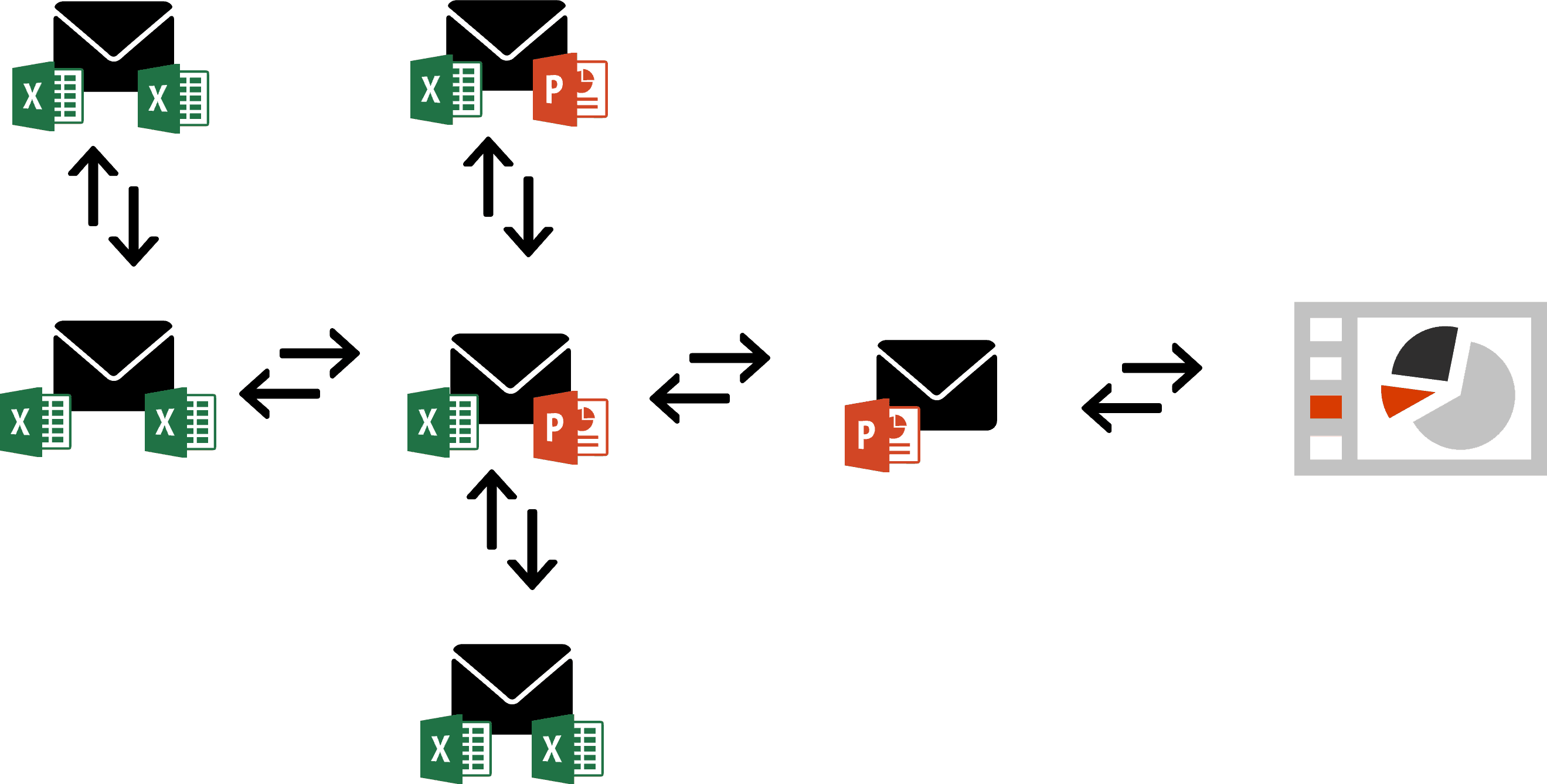

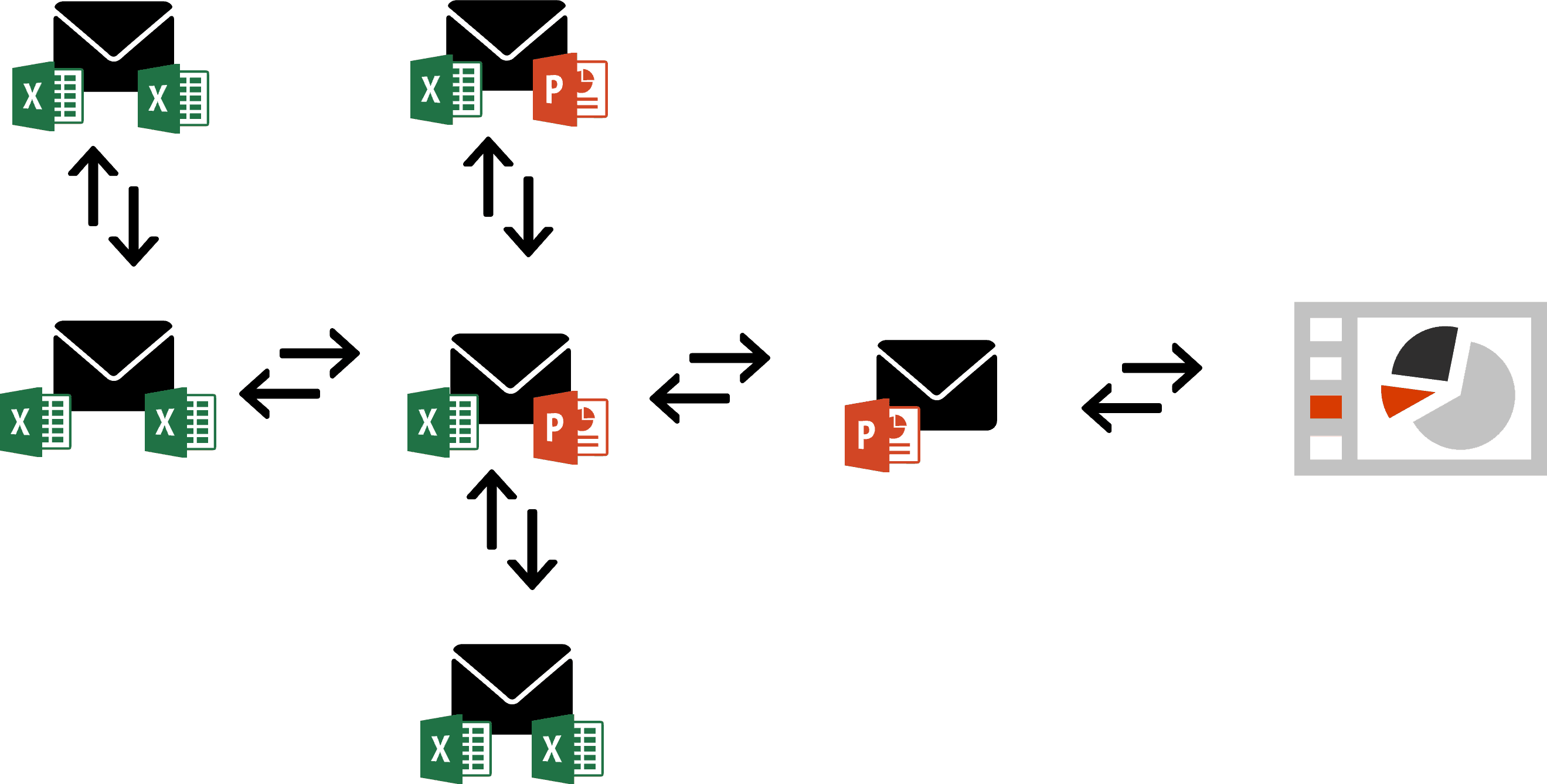

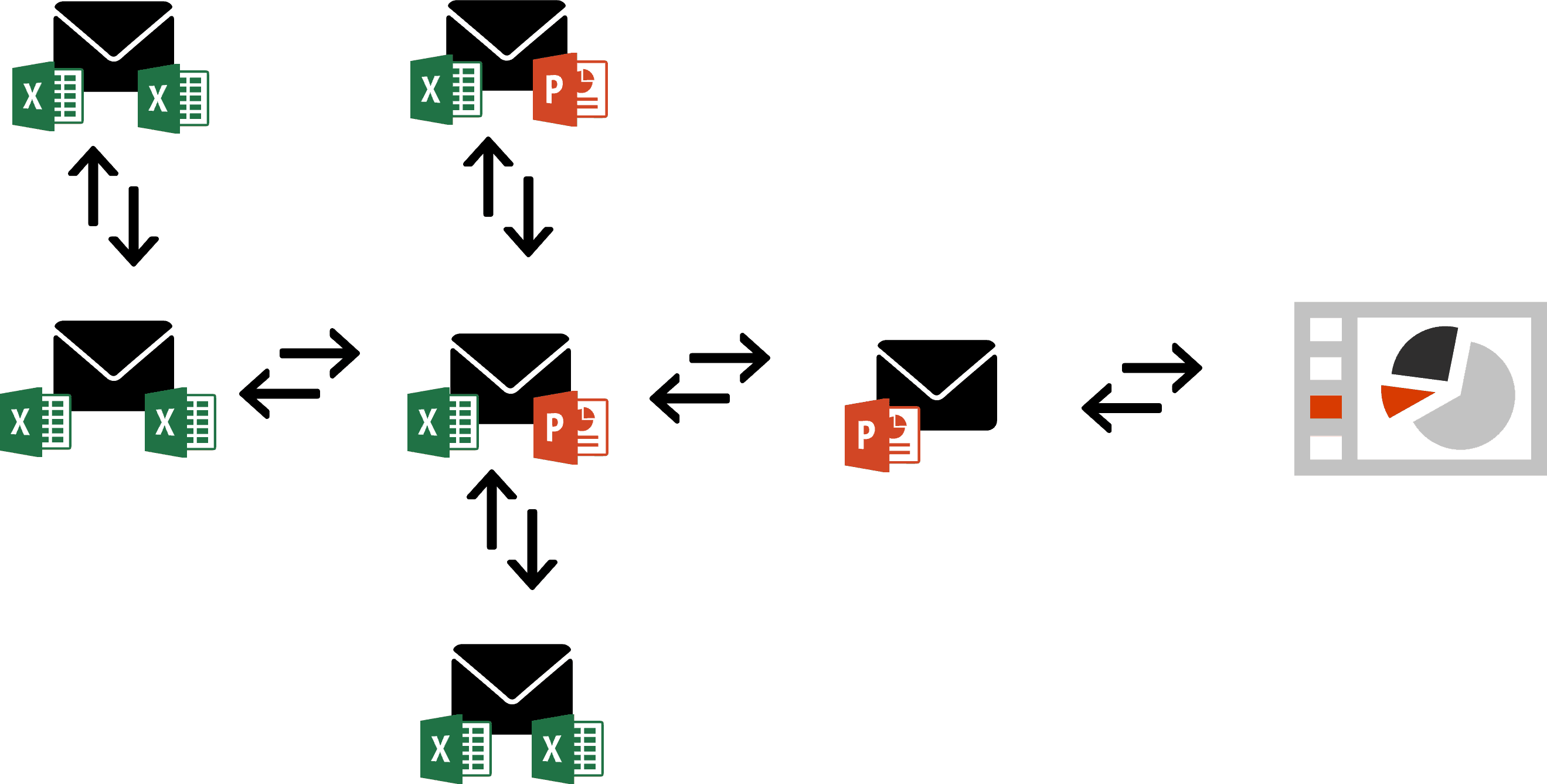

Problematic Collaboration Scenarios

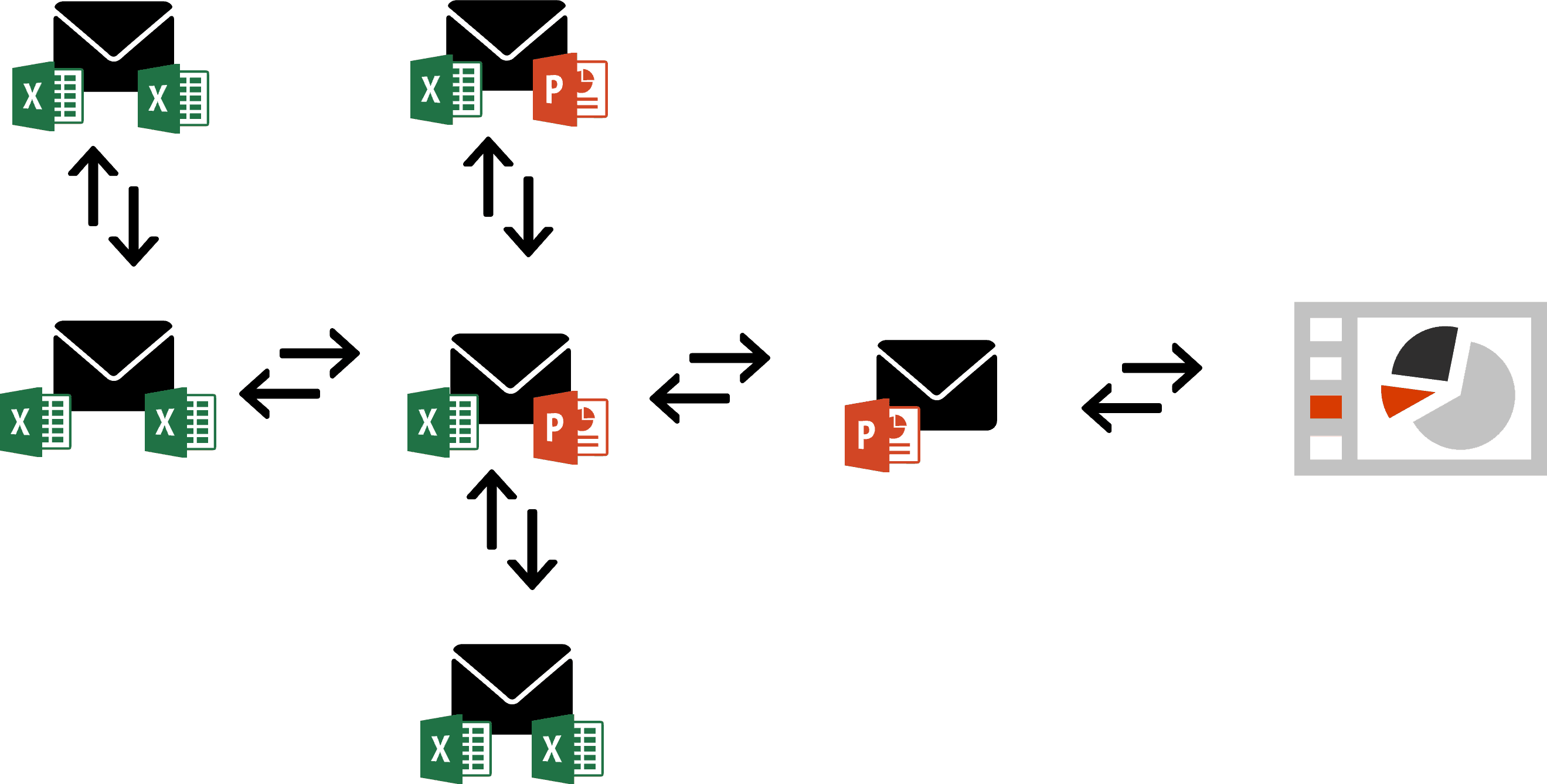

Let's take a closer look at how organizations collaborate with spreadsheets, based on examples operating with the Microsoft Office Product Family.

Let's take a closer look at how organizations collaborate with spreadsheets, based on examples operating with the Microsoft Office Product Family.

Scenario 1: The Email Chain Jungle

Scenario 1: The Email Chain Jungle

The data from different Excel files is being sent around and eventually copied into the final format for reporting, which could be PowerPoint, but also ingested into Power BI reports, Tableau, or any other digital reporting application. Data might get stale at different points throughout the consolidation process. The delay to validate and trust the data takes time because of multiple stakeholders.

While this might not impact reports that can have a 5-10 day delay (like quarterly reports), any attempt to pro-actively manage this data more frequently will create a massive overhead.

The data from different Excel files is being sent around and eventually copied into the final format for reporting, which could be PowerPoint, but also ingested into Power BI reports, Tableau, or any other digital reporting application. Data might get stale at different points throughout the consolidation process. The delay to validate and trust the data takes time because of multiple stakeholders.

While this might not impact reports that can have a 5-10 day delay (like quarterly reports), any attempt to pro-actively manage this data more frequently will create a massive overhead.

Scenario 2: File Version Chaos / Overwriting Risk

Scenario 2: File Version Chaos / Overwriting Risk

Here, the final versions of the data as well as the accompanying report or presentation are centralized in a Team. Teams is used as a collaborative tool, and access might be given on the Team level, the channel level, the tab, the folder, or the file level. This could introduce security vulnerabilities. Additionally, Excel security only exists on columns and is configured with a single password (not by stakeholder group). The lack of granular control over security increases the likelihood that collaborators slip into the wrong row/column/cell that was supposed to be filled out by a colleague.

While version control is enabled, frequent usage on a spreadsheet involving 10+ collaborators increases maintenance to identify and roll back unwanted changes.

Further, the "technical administrator" has to secure the final version, which means taking away everyone's write access and/or copying it into a final location.

Finally, in similarly layed out scenarios involving financials security has another culprit. Anyone with "write" access will be able to read the whole file. Therefore, confidential data has to be separated out into another spreadsheet or location, even though it could follow the same rules and calculations. Aggregates will need to be composed for different stakeholder groups in reporting, which increases maintenance cost. It takes away time from the team to focus on value-added work.

But the inherent risk of using spreadsheets for collaboration lies in the structure of a spreadsheet itself.

Here, the final versions of the data as well as the accompanying report or presentation are centralized in a Team. Teams is used as a collaborative tool, and access might be given on the Team level, the channel level, the tab, the folder, or the file level. This could introduce security vulnerabilities. Additionally, Excel security only exists on columns and is configured with a single password (not by stakeholder group). The lack of granular control over security increases the likelihood that collaborators slip into the wrong row/column/cell that was supposed to be filled out by a colleague.

While version control is enabled, frequent usage on a spreadsheet involving 10+ collaborators increases maintenance to identify and roll back unwanted changes.

Further, the "technical administrator" has to secure the final version, which means taking away everyone's write access and/or copying it into a final location.

Finally, in similarly layed out scenarios involving financials security has another culprit. Anyone with "write" access will be able to read the whole file. Therefore, confidential data has to be separated out into another spreadsheet or location, even though it could follow the same rules and calculations. Aggregates will need to be composed for different stakeholder groups in reporting, which increases maintenance cost. It takes away time from the team to focus on value-added work.

But the inherent risk of using spreadsheets for collaboration lies in the structure of a spreadsheet itself.

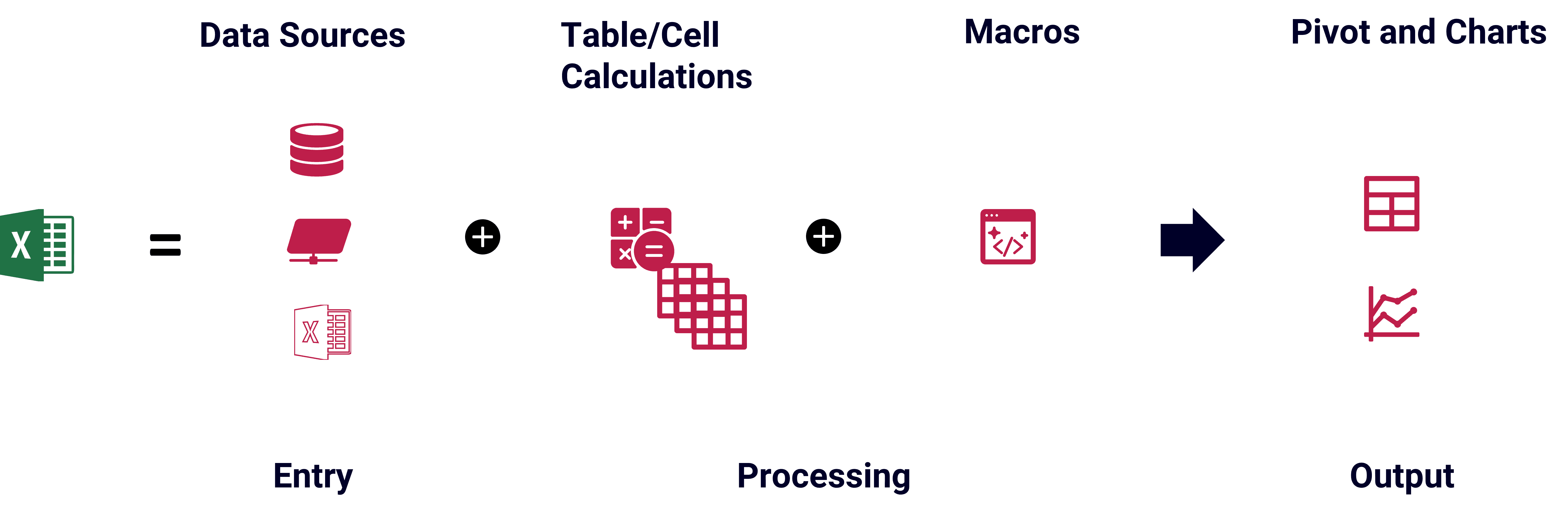

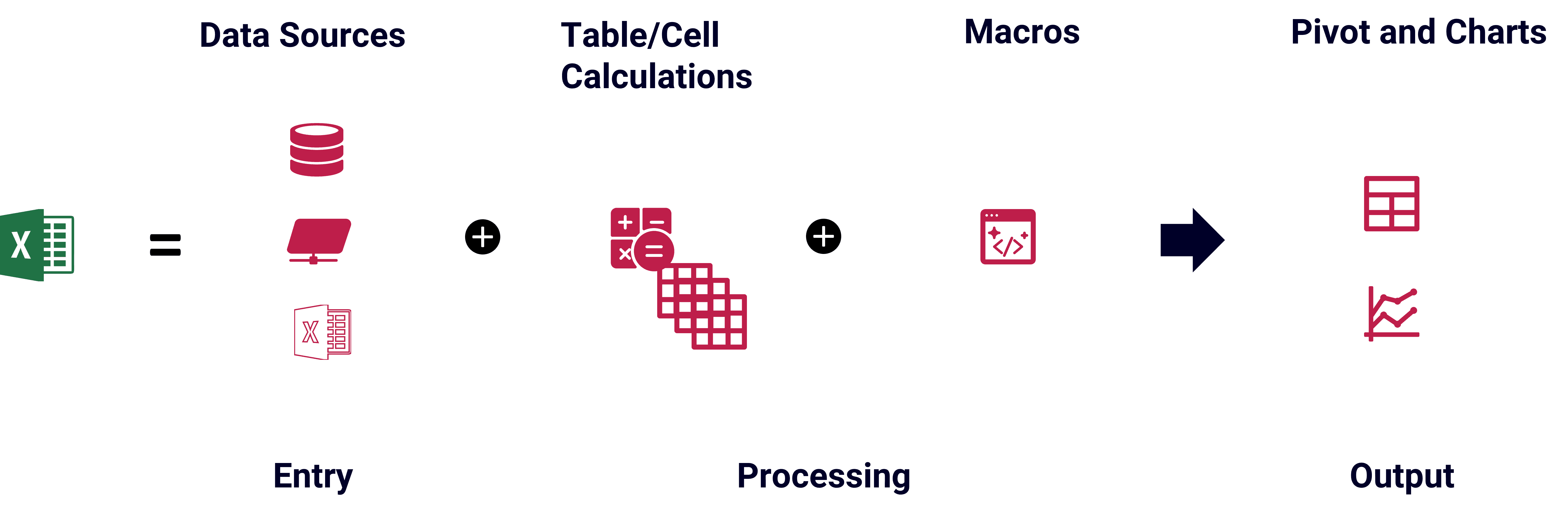

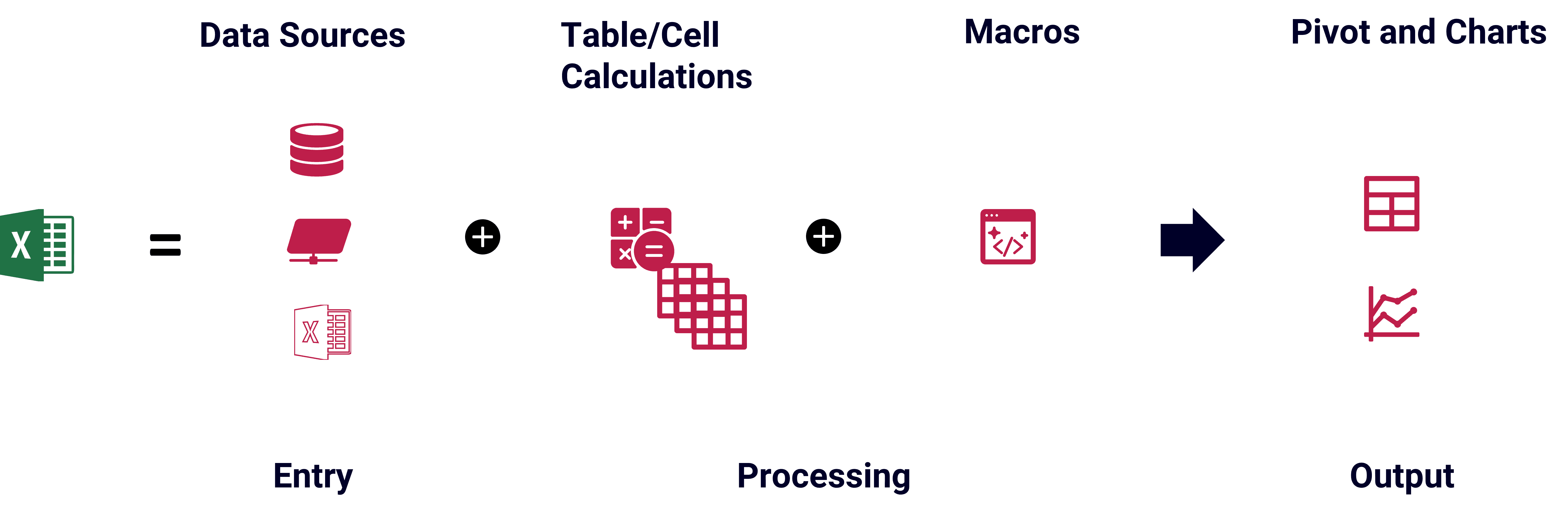

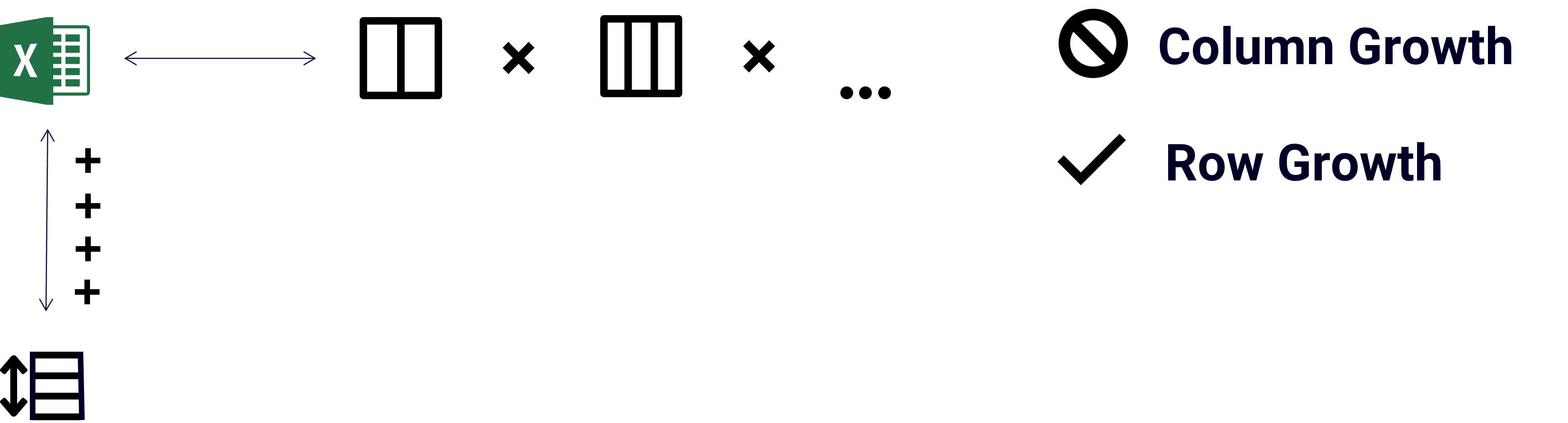

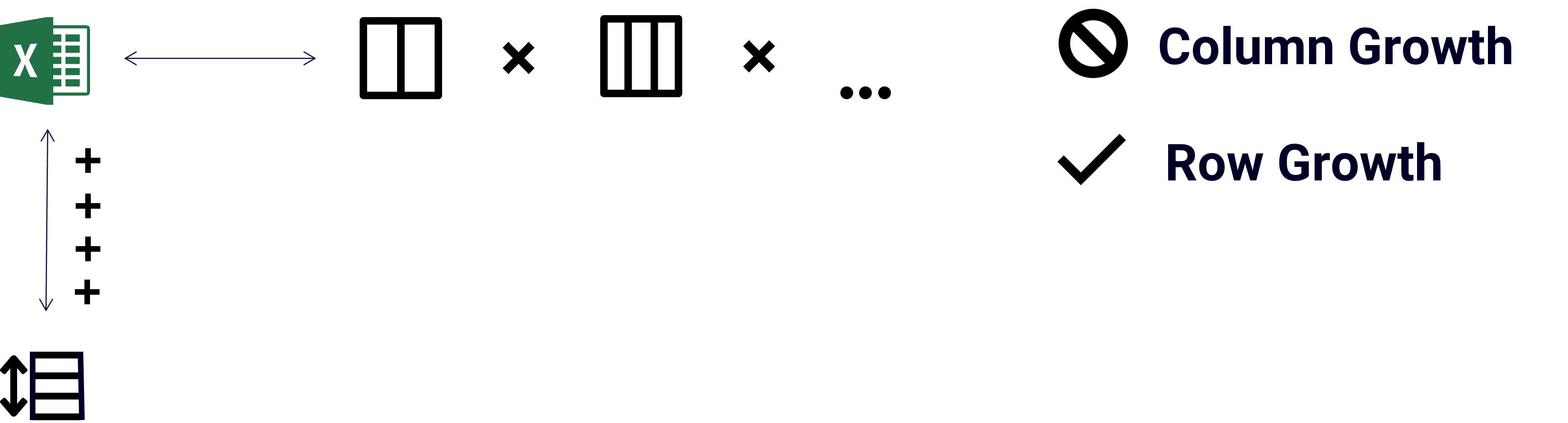

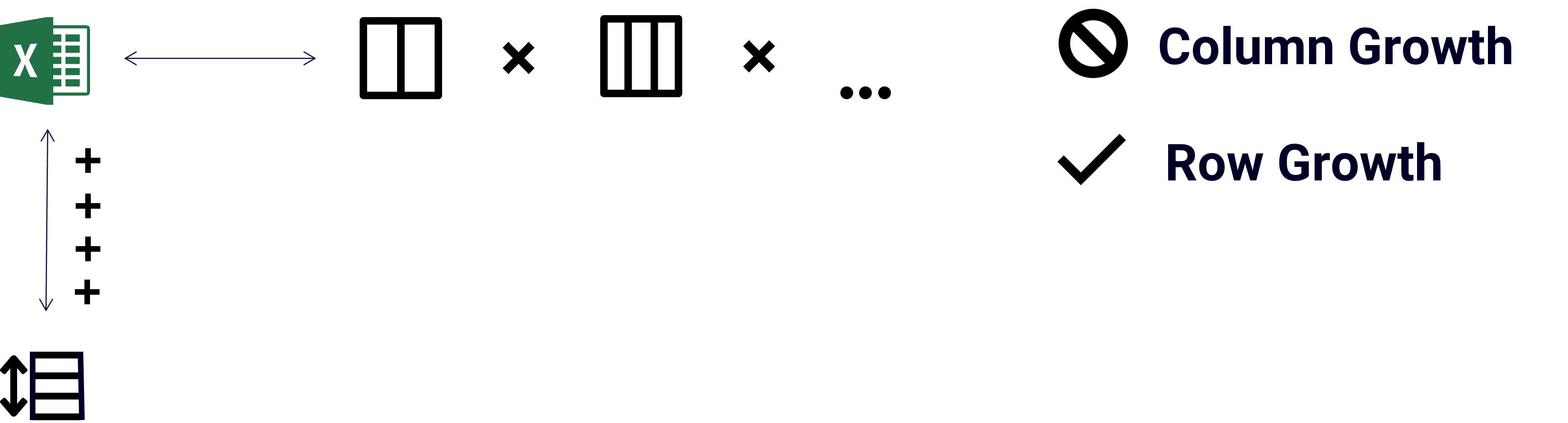

Anatomy of a Spreadsheet

Anatomy of a Spreadsheet

Imaging spreadsheets as an application with a process consisting of three major parts: entering data, processing data, and displaying or saving the result. The data entry in a spreadsheet is manually entered data as well as its data sources, which can be anything (referencing information in the same file on a hidden sheet; other spreadsheets; other relational databases; APIs).

The incoming data is shaped in rows and columns. The editor of the spreadsheet manipulates the cells to solve for the desired objective - for example summing up money by month or by any other category; aggregating quantities for line items in invoices and quotes; complex modeling of engineering or chemical processes, like tank pressure, forecasting chemical compound viscosity, etc. The manipulations are done on the cells themselves or created as new columns and rows. In addition, you can record or code macros to repeat complex operations on the spreadsheet more conveniently and rule-based.

The final output is the most interesting element, because it is supposed to be interpreted unambiguously and support data-driven decision-making. Meaning, the consumers of the output have to trust that entry and processing happened transparently, efficiently, securely and with the least chance for error. Herein lies the crux.

Imaging spreadsheets as an application with a process consisting of three major parts: entering data, processing data, and displaying or saving the result. The data entry in a spreadsheet is manually entered data as well as its data sources, which can be anything (referencing information in the same file on a hidden sheet; other spreadsheets; other relational databases; APIs).

The incoming data is shaped in rows and columns. The editor of the spreadsheet manipulates the cells to solve for the desired objective - for example summing up money by month or by any other category; aggregating quantities for line items in invoices and quotes; complex modeling of engineering or chemical processes, like tank pressure, forecasting chemical compound viscosity, etc. The manipulations are done on the cells themselves or created as new columns and rows. In addition, you can record or code macros to repeat complex operations on the spreadsheet more conveniently and rule-based.

The final output is the most interesting element, because it is supposed to be interpreted unambiguously and support data-driven decision-making. Meaning, the consumers of the output have to trust that entry and processing happened transparently, efficiently, securely and with the least chance for error. Herein lies the crux.

Data Entry

Data Entry

To the editor of the Excel spreadsheet the data sources might be writeable or read-only. Because many applications are designed with connections and add-ins for Excel, which can result in a direct manipulation of data to the source, given user permissions. This opens up a whole new level of complications when validating data from spreadsheets that reference multiple sources, because it begs the questions:

Do all collaborators on the master sheet have the same permissions to all the data sources to refresh the spreadsheet properly?

How is security handled on the data source? Who else could change data that flows into the master spreadsheet we are working on?

If data gets changed or corrupted at the source, what mechanisms are in place to identify, roll back the changes, and put governance and controls in place to prevent the same case from happening again?

To the editor of the Excel spreadsheet the data sources might be writeable or read-only. Because many applications are designed with connections and add-ins for Excel, which can result in a direct manipulation of data to the source, given user permissions. This opens up a whole new level of complications when validating data from spreadsheets that reference multiple sources, because it begs the questions:

Do all collaborators on the master sheet have the same permissions to all the data sources to refresh the spreadsheet properly?

How is security handled on the data source? Who else could change data that flows into the master spreadsheet we are working on?

If data gets changed or corrupted at the source, what mechanisms are in place to identify, roll back the changes, and put governance and controls in place to prevent the same case from happening again?

Data Processing

Data Processing

Excel behaves like a relational database. It is optimized for row operations. When adding rows the computation is closer to "addition". In contrast, when adding columns the computation is closer to "multiplication", which is more complex. On top of that, adding or changing column names or order comes with headaches of adjusting all dynamic outputs (like Power BI reports). While you can have (and should have!) a unique identifier per rows, columns in Excel can only be referenced by name and order. This creates massive complications when reporting the data out, as changes to spreadsheet structure can happen at will of the editor or collaborators.

Another factor to consider are macros to process bulk data. Those can not only introduce security vulnerabilities, install viruses through a backdoor, reach out into the internet, etc. but also corrupt the data if any connection is not cleanly identifiable or refreshable. Although creating macros by professional coders might help with error handling, the code lives inside the file and can therefore be considered a security risk.

Excel behaves like a relational database. It is optimized for row operations. When adding rows the computation is closer to "addition". In contrast, when adding columns the computation is closer to "multiplication", which is more complex. On top of that, adding or changing column names or order comes with headaches of adjusting all dynamic outputs (like Power BI reports). While you can have (and should have!) a unique identifier per rows, columns in Excel can only be referenced by name and order. This creates massive complications when reporting the data out, as changes to spreadsheet structure can happen at will of the editor or collaborators.

Another factor to consider are macros to process bulk data. Those can not only introduce security vulnerabilities, install viruses through a backdoor, reach out into the internet, etc. but also corrupt the data if any connection is not cleanly identifiable or refreshable. Although creating macros by professional coders might help with error handling, the code lives inside the file and can therefore be considered a security risk.

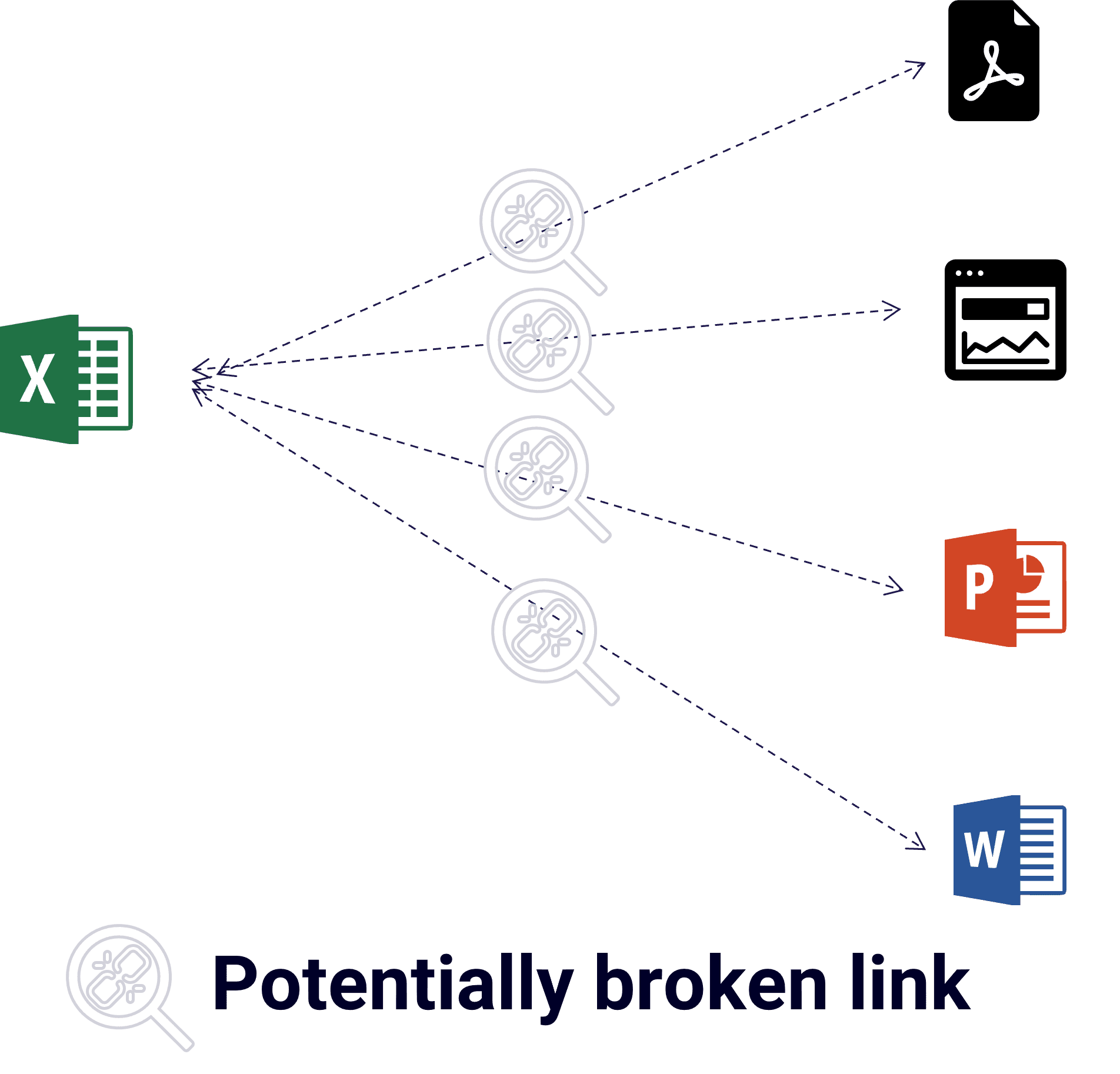

Data Output

Data Output

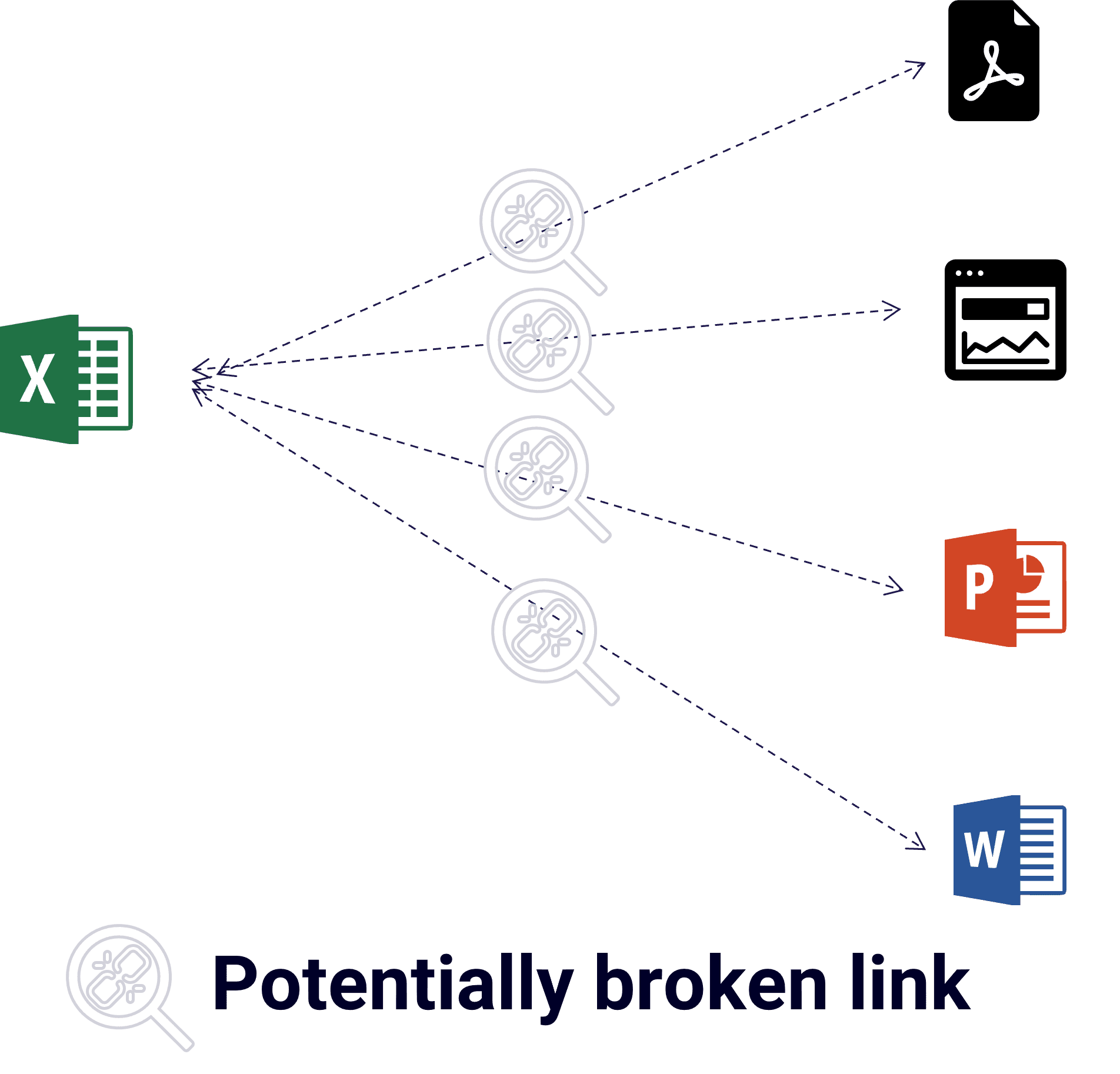

Because Excel is not a dedicated reporting tool like Power BI or Tableau, changes can be made to the data later. When Excel is the source of the calculations and is used in documents, presentations, or anything other entity that is open for editing, the actuality of the data, the sources, and calculations can be called into question.

Finally, the links to or from the spreadsheet can break anytime the file is moved or security the location is tampered with.

Lift and shift to the Power Platform: Efficient, scalable, and fast to implement

Lift and shift to the Power Platform: Efficient, scalable, and fast to implement

The Microsoft Power Platform separates concerns of data entry, data processing, and data output in three distinct applications with benefits, that stack on top of each other when used in combination. Additionally, business process modeling and visual integration with Microsoft Visio shorten the time to model, document, and maintain the specifics of the solution.

The Microsoft Power Platform separates concerns of data entry, data processing, and data output in three distinct applications with benefits, that stack on top of each other when used in combination. Additionally, business process modeling and visual integration with Microsoft Visio shorten the time to model, document, and maintain the specifics of the solution.

The Microsoft Power Platform separates concerns of data entry, data processing, and data output in three distinct applications with benefits, that stack on top of each other when used in combination. Additionally, business process modeling and visual integration with Microsoft Visio shorten the time to model, document, and maintain the specifics of the solution.

Data Entry in Power Apps

Efficient time-to-market.

Security integrated with Microsoft Entra ID.

Responsive and adaptive design.

Connects to every data source.

Least amount of maintenance.

Process in Power Automate

Approval processes, integrated with Teams and Outlook.

Form processing & data mining.

Complex operations and calculations.

Outputs Excel, Word, etc. as final documents.

Reporting in Power BI

Predictive Modeling

Integration from multiple data sources (SAP, Salesforce, ADP, all of Microsoft, custom APIs, etc.)

The large advantage is the granularity of control that can be given over each element of the solution while efficiently handling security. With proper design and decisions to simplify solutions based on the Power Platform are scalable indefinitely.

The large advantage is the granularity of control that can be given over each element of the solution while efficiently handling security. With proper design and decisions to simplify solutions based on the Power Platform are scalable indefinitely.

The large advantage is the granularity of control that can be given over each element of the solution while efficiently handling security. With proper design and decisions to simplify solutions based on the Power Platform are scalable indefinitely.

Steps to lift spreadsheet solutions to the Power Platform

Steps to lift spreadsheet solutions to the Power Platform

The following activities will give you what you need to migrate any Excel "application" or "tool" into the Power Platform.

The following activities will give you what you need to migrate any Excel "application" or "tool" into the Power Platform.

Reporting on data from new applications that are well-designed and migrated to the Power Platform can be created and maintained efficiently. The big lift is the design of the tables, columns, their relationships, and the App itself. Once implemented, reporting in Power BI becomes a breeze, because all relationships and columns are set in the source and don't need to be arduously created in the Power BI data model. That makes maintenance efficient and lets the report developer focus on the user experience, visuals, and navigation. The bigger questions in reporting relate to integration and infrastructure:

Integration might include more data sources that now can be reliably combined into a set of reports, a reporting application, or a dashboard with self-service capabilities.

Infrastructure means the assessment, design, and implementation of licensing, sharing, security, features, deployment pipeline, capacity usage cost, end-user training, developer training and more. Larger organizations tend to centralize reporting administration by creating a Center of Excellence for Reporting (Coe) and a Community for Insights (CFI) that supports and shares knowledge about the availability of reports, report creation, security best practices in accordance with company governance and compliance policies, etc.

Reporting on data from new applications that are well-designed and migrated to the Power Platform can be created and maintained efficiently. The big lift is the design of the tables, columns, their relationships, and the App itself. Once implemented, reporting in Power BI becomes a breeze, because all relationships and columns are set in the source and don't need to be arduously created in the Power BI data model. That makes maintenance efficient and lets the report developer focus on the user experience, visuals, and navigation. The bigger questions in reporting relate to integration and infrastructure:

Integration might include more data sources that now can be reliably combined into a set of reports, a reporting application, or a dashboard with self-service capabilities.

Infrastructure means the assessment, design, and implementation of licensing, sharing, security, features, deployment pipeline, capacity usage cost, end-user training, developer training and more. Larger organizations tend to centralize reporting administration by creating a Center of Excellence for Reporting (Coe) and a Community for Insights (CFI) that supports and shares knowledge about the availability of reports, report creation, security best practices in accordance with company governance and compliance policies, etc.

Reporting on data from new applications that are well-designed and migrated to the Power Platform can be created and maintained efficiently. The big lift is the design of the tables, columns, their relationships, and the App itself. Once implemented, reporting in Power BI becomes a breeze, because all relationships and columns are set in the source and don't need to be arduously created in the Power BI data model. That makes maintenance efficient and lets the report developer focus on the user experience, visuals, and navigation. The bigger questions in reporting relate to integration and infrastructure:

Integration might include more data sources that now can be reliably combined into a set of reports, a reporting application, or a dashboard with self-service capabilities.

Infrastructure means the assessment, design, and implementation of licensing, sharing, security, features, deployment pipeline, capacity usage cost, end-user training, developer training and more. Larger organizations tend to centralize reporting administration by creating a Center of Excellence for Reporting (Coe) and a Community for Insights (CFI) that supports and shares knowledge about the availability of reports, report creation, security best practices in accordance with company governance and compliance policies, etc.

Conclusion

Conclusion

Conclusion

Security and efficiency are the most common drivers to lift and shift Excel sheets, tools, or full spreadsheet applications and workflows to a low-code platform like the Power Platform. The fear of inaccurate data, the effects on maintenance of data and correcting data mistakes that are done in isolation by individuals are other reasons that is driving cloud adoption. Finally, leadership wants to see reliable 360 views of all data. Trust in data is hardly possible with spreadsheets.

References

1) Data Ladder. “The Impact of Poor Data Quality: Risks, Challenges, and Solutions.”, 2023. Accessed 15 May 2024, Weblink.

2) F1F9. "Spreadsheet Blunders Costing Business Billions." CNBC, 30 July 2013, Weblink.

3) Apparity. "Spreadsheet Error Causes a $10.5M Mistake.", 24 January 2022, Weblink.

4) IBM. "Data Science and AI Elite Team: Overview.", 2021, Weblink.

5) Pragmatic Works. "The Cost of Bad Data Infographic." 11 July 2022, Weblink.

6) Datafold. "The State of Data Quality in 2021." 2021, Weblink.